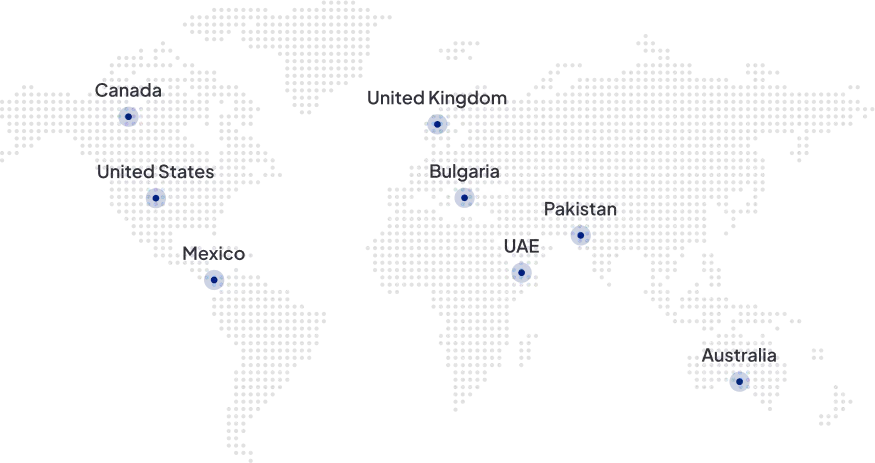

In data science and engineering, choosing the right tools can make your projects noticeably more capable and efficient. One such combination is Microsoft Fabric with Databricks. Integrating the two can benefit a range of industries, for example through faster data processing and stronger analytics capabilities.

How Integrating Microsoft Fabric with Databricks Enhances Functionality

Integrating Microsoft Fabric with Databricks brings together Microsoft's cloud data platform and the Databricks analytics platform. The combination can speed up data processing and provides a scalable environment for advanced analytics and machine learning models. Industries ranging from finance to healthcare can use it to process large volumes of data more efficiently, gain real-time insights, and drive innovation.

Specific Benefits for Different Industries

- Finance: Enhanced ability to detect fraud in real time and perform complex financial modeling at scale.

- Healthcare: Improved processing of patient data for predictive analytics, leading to better patient outcomes.

- Retail: Ability to analyze customer data and market trends quickly, enabling more personalized shopping experiences.

Steps to Integrate Microsoft Fabric with Databricks

- Set up Azure Databricks Workspace: Begin by creating an Azure Databricks workspace if you haven’t already. This will serve as your primary environment for running analytics and machine learning models.

- Configure Microsoft Fabric: Ensure that Microsoft Fabric is properly configured within your Azure environment. This involves setting up necessary permissions and network settings to allow seamless communication between Microsoft Fabric and Databricks.

- Establish Connectivity: Use Azure Data Factory or a similar service to establish connectivity between Microsoft Fabric and Databricks. This typically involves configuring an integration runtime and specifying the details of your Databricks workspace.

- Deploy and Test: Once everything is configured, deploy a test workload to verify that the integration is working as expected. This could involve running a simple data processing job or executing a machine learning model within Databricks using data sourced from Microsoft Fabric.
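The "Deploy and Test" step can be sketched as a small smoke test run from a Databricks notebook. This is a minimal sketch, not a definitive implementation: it assumes the Fabric data lives in a lakehouse exposed through OneLake, that the cluster is already authorized to read it, and that the table is stored in Delta format. The helper names (`onelake_table_uri`, `smoke_test`) and the workspace, lakehouse, and table names are hypothetical.

```python
# Hypothetical helpers for a smoke test; the names below are illustrative only.

ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_table_uri(workspace: str, lakehouse: str, table: str) -> str:
    """Build an abfss:// URI for a table in a Fabric lakehouse on OneLake.

    Assumes names without '/' characters; names containing spaces or other
    special characters may need to be replaced by the item GUIDs instead.
    """
    for label, value in (("workspace", workspace),
                         ("lakehouse", lakehouse),
                         ("table", table)):
        if not value or "/" in value:
            raise ValueError(f"{label} must be a non-empty name without '/'")
    return f"abfss://{workspace}@{ONELAKE_HOST}/{lakehouse}.Lakehouse/Tables/{table}"


def smoke_test(spark, uri: str, min_rows: int = 1) -> int:
    """Read the table at `uri` and fail loudly if it has too few rows.

    `spark` is the SparkSession that a Databricks notebook provides.
    Assumes the cluster already has credentials for the storage location.
    """
    row_count = spark.read.format("delta").load(uri).count()
    if row_count < min_rows:
        raise RuntimeError(f"Expected at least {min_rows} rows at {uri}, got {row_count}")
    return row_count
```

In a notebook, this could be called as `smoke_test(spark, onelake_table_uri("sales_ws", "sales_lh", "orders"))`. A successful row count confirms that permissions, networking, and paths are all in place before a real workload is deployed.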

Technical Overview of the Integration

Architectural Considerations and Setup Requirements

When integrating Microsoft Fabric with Databricks, it’s important to consider the overall architecture of your data environment. This includes understanding the flow of data between systems, the volume of data being processed, and any specific latency or performance requirements.

Key Components Involved in the Integration

- APIs and Connectors: Leveraging APIs and connectors provided by Azure and Databricks is crucial for facilitating the integration. These tools allow for efficient data transfer and task execution between Microsoft Fabric and Databricks.

- Data Lake Storage: Utilizing Azure Data Lake Storage as a repository for raw data that can be processed by Databricks is a common approach. This setup allows for scalable storage and efficient data access.

- Security and Compliance: Ensuring that the integration adheres to security and compliance requirements is paramount. This involves configuring proper access controls, encryption, and monitoring mechanisms.
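As an illustration of the "APIs and Connectors" component, the sketch below builds a request for the Databricks Jobs REST API (`POST /api/2.1/jobs/run-now`), which an orchestrator could use to trigger a Databricks job once new data has landed. It is a hedged example: the function name `build_run_now_request`, the workspace URL, the job ID, and the `table_uri` parameter are hypothetical, and it assumes authentication with a bearer token. The request is only constructed, not sent.

```python
import json
import urllib.request
from typing import Optional


def build_run_now_request(workspace_url: str,
                          token: str,
                          job_id: int,
                          notebook_params: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a Jobs API 'run-now' request.

    `workspace_url` is the base URL of the Databricks workspace and `token`
    is a bearer token; both are assumptions supplied by the caller.
    """
    payload = {"job_id": job_id}
    if notebook_params:
        # Optional key/value parameters passed to the job's notebook task.
        payload["notebook_params"] = notebook_params
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.1/jobs/run-now",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request is then a matter of passing it to `urllib.request.urlopen`. In line with the security point above, the token is best read from a secret store rather than hard-coded in a notebook or pipeline definition.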