Hal Varian, Google’s Chief Economist, wisely said, “The ability to take data, to understand it, process it, extract value from it, visualize it, and communicate it, is going to be a hugely important skill in the next decades.”

It is hard to overstate the power of data. Our world today runs on data, and those who can work with it intelligently to extract meaningful insights have a clear advantage.

A strong data environment is the foundation of a successful business. Storing data is only the starting point; managing it well and analyzing it are what turn it into value.

Microsoft Fabric is a comprehensive platform that lets users ingest, create, share, and visualize data with a range of tools. Implementing it is a significant undertaking that reshapes how your organization handles information.

In this blog, we guide you through the essential stages of implementing Microsoft Fabric so that you can make the most of your Fabric experience. With the right approach, you can use Microsoft Fabric’s features to improve your data management.

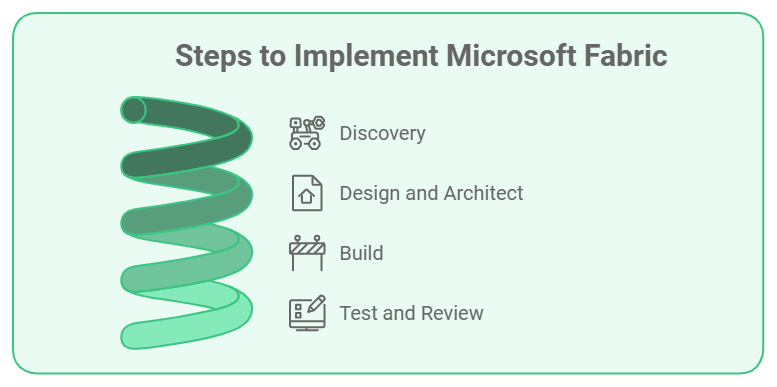

Steps to Implement Microsoft Fabric

Implementing Microsoft Fabric follows a series of stages designed to keep your data environment strong, secure, and aligned with your business goals.

Let’s walk through each stage of the Microsoft Fabric setup in detail.

1. Discovery

The first step in a successful Microsoft Fabric implementation is the discovery phase, which includes the following:

Conduct Discovery Sessions With Key Stakeholders

Engaging key stakeholders and gathering their input is an important step in implementing Microsoft Fabric. It helps you understand their expectations, their pain points, and what they hope to achieve with Microsoft Fabric.

These sessions should be collaborative, so that everyone ends up with a shared understanding of Microsoft Fabric’s features and how they help meet organizational needs.

Understand Goals, Challenges, and Aspirations Related to Data and Analytics

Pinpointing what your organization wants to achieve with Microsoft Fabric will help you manage and direct the project. Common goals include improving data accuracy, speeding up reporting, and combining disparate data sources.

Moreover, understanding your challenges, such as technical limitations or data silos, will let you devise a focused strategy for Microsoft Fabric configuration.

Share Findings

After the discovery, you will present a summary of your findings to all stakeholders and set the base for the next phase. This includes documenting your objectives, challenges, and top priorities.

These findings will be a foundation for your Microsoft Fabric setup, helping to manage expectations and clearly define the project’s scope.

2. Design and Architect

After you have a complete understanding of the requirements, you must move on to the design phase:

Build a Long-Term Roadmap for a Modern Data Platform

Defining a clear roadmap is essential for a successful implementation. A roadmap outlines the immediate steps for the Microsoft Fabric implementation and accounts for future scalability, technology upgrades, and changes in your data strategy.

This plan must include important benchmarks such as timelines, milestones, and key performance indicators (KPIs) for every phase.

Identify Data Sources

You must identify the data sources you intend to integrate so that all important information is included. Data sources include cloud platforms, on-premises databases, IoT devices, and third-party applications. Pinpointing these sources will let you configure them correctly during the Microsoft Fabric setup.
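One lightweight way to keep track of the sources identified in this step is a simple inventory. The sketch below is only an illustration: the source names, owners, and refresh cadences are hypothetical, and the four categories mirror the kinds of sources listed above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str     # hypothetical label for the source
    kind: str     # "cloud", "on_premises", "iot", or "third_party"
    owner: str    # team accountable for the source
    refresh: str  # expected refresh cadence, e.g. "daily"


# Illustrative entries only; replace with your organization's real sources.
SOURCES = [
    DataSource("sales_db", "on_premises", "finance", "daily"),
    DataSource("web_events", "cloud", "marketing", "hourly"),
    DataSource("plant_sensors", "iot", "operations", "streaming"),
    DataSource("crm_export", "third_party", "sales", "weekly"),
]


def group_by_kind(sources):
    """Group source names by category so gaps are easy to spot."""
    grouped = defaultdict(list)
    for source in sources:
        grouped[source.kind].append(source.name)
    return dict(grouped)


inventory = group_by_kind(SOURCES)
for kind, names in sorted(inventory.items()):
    print(f"{kind}: {', '.join(names)}")
```

An inventory like this can feed directly into the architecture document, since each entry already names an owner and a refresh expectation.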

Develop Architecture Documents

After this, prepare a detailed architecture document that will act as the master plan for your Microsoft Fabric configuration. This document should include the data flow, integrations, and how different components interact.

Diagrams and detailed descriptions of the data architecture will help guarantee that everyone involved understands the structure well.

Develop a Custom Implementation Plan

Customize the implementation according to your organization’s specific requirements. Every step must be planned carefully, from initial setup to full deployment. This plan should identify the specific Microsoft Fabric features that will help you meet your goals.

Outline Security

Data security is a top priority in any data project. Your design must include best practices for safeguarding data at every stage of the Microsoft Fabric implementation.

This covers identity management, data encryption, secure access protocols, and compliance with regulations such as GDPR or HIPAA. The security plan must address both internal and external threats and provide a strategy for managing data access.

3. Build

In the build step, the platform is set up and configured according to your design plan:

Set Up the Microsoft Fabric Environment

Now, you’ll start the actual implementation by setting up the environment. Because Microsoft Fabric is delivered as a cloud service, this mostly means provisioning capacity, creating workspaces, installing any components needed to reach on-premises sources, and meeting network requirements.

Proper Microsoft Fabric configuration consists of setting up user access controls, defining user roles, and ensuring every team member has the right permissions according to their role in the project.
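The idea of mapping team members to roles, and roles to permissions, can be sketched in a few lines. The role names below match the four workspace roles Fabric offers (Admin, Member, Contributor, Viewer), but the capability sets and the user assignments are simplified assumptions for illustration, not the actual Fabric permission matrix.

```python
# Role names follow Fabric's workspace roles; the capability sets are
# simplified assumptions, not the real permission matrix.
ROLE_CAPABILITIES = {
    "Admin": {"view", "edit", "share", "manage_access"},
    "Member": {"view", "edit", "share"},
    "Contributor": {"view", "edit"},
    "Viewer": {"view"},
}

# Hypothetical project assignments: who holds which role.
ASSIGNMENTS = {
    "platform_owner": "Admin",
    "bi_analyst": "Member",
    "data_engineer": "Contributor",
    "executive": "Viewer",
}


def can(user, action):
    """Return True if the user's assigned role includes the action."""
    role = ASSIGNMENTS.get(user)
    return action in ROLE_CAPABILITIES.get(role, set())


for user in ASSIGNMENTS:
    print(user, "can edit:", can(user, "edit"))
```

Writing the plan down in this form before touching the workspace makes it easier to review with stakeholders and to spot anyone who has more access than their role needs.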

Bring Data Into OneLake

Integrate and ingest all required data into OneLake, Microsoft’s central data lake service. This involves connecting different data sources, setting up data pipelines, and transforming data for storage.

The aim is to have a centralized, unified source for all data, facilitating data analytics and reporting by employing Microsoft Fabric’s powerful features.

Set Up Medallion Architecture Using Data Warehouse(s) and Lakehouse(s)

Use the Medallion Architecture to create structured layers in your data platform. It consists of three layers: Bronze (raw data), Silver (cleaned and transformed data), and Gold (aggregated, analysis-ready data).

Combining Data Warehouses and Lakehouses helps keep data stored efficiently and readily accessible. This step improves your ability to scale and to analyze data accurately.
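To make the three layers concrete, here is a minimal sketch of the Bronze, Silver, and Gold flow in plain Python. In Fabric this work would typically happen in notebooks, pipelines, or SQL against Lakehouse and Warehouse tables; the sample records and function names here are hypothetical and exist only to show what each layer is responsible for.

```python
from collections import defaultdict

# Bronze: raw records exactly as they arrived (hypothetical sample data).
bronze = [
    {"order_id": "1", "region": " North ", "amount": "120.50"},
    {"order_id": "2", "region": "south", "amount": "80"},
    {"order_id": "2", "region": "south", "amount": "80"},   # duplicate
    {"order_id": "3", "region": "North", "amount": "n/a"},  # bad amount
]


def to_silver(rows):
    """Clean the raw rows: cast types, trim text, drop bad or duplicate rows."""
    seen, silver = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        if row["order_id"] in seen:
            continue  # skip orders that were already loaded
        seen.add(row["order_id"])
        silver.append({
            "order_id": int(row["order_id"]),
            "region": row["region"].strip().title(),
            "amount": amount,
        })
    return silver


def to_gold(rows):
    """Aggregate cleaned rows into analysis-ready totals per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Keeping the raw Bronze data untouched means the Silver and Gold layers can always be rebuilt if a cleaning rule changes.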

Set Up Power BI and Semantic Models

Power BI plays an important role in Microsoft Fabric. Well-designed semantic models translate raw data into meaningful information. A semantic model describes the objects in a database and how they relate to one another in their application environment, and it simplifies data exploration by organizing information in an intuitive way.

Setting up these models lets you benefit from Microsoft Fabric features, leading to accurate and meaningful insights.
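As a rough illustration of what a semantic model captures, the sketch below defines two related tables and one reusable calculation over them. The tables, the relationship, and the function name are hypothetical; in Power BI the same ideas are expressed as model tables, relationships, and measures rather than Python code.

```python
# Hypothetical fact table: one row per sale.
sales = [
    {"product_id": 1, "amount": 100.0},
    {"product_id": 2, "amount": 40.0},
    {"product_id": 1, "amount": 60.0},
]

# Hypothetical dimension table, keyed by product_id.
products = {
    1: {"name": "Router", "category": "Hardware"},
    2: {"name": "Antivirus", "category": "Software"},
}


def total_sales_by(attribute):
    """Sum sales amounts grouped by a product attribute.

    Follows the sales.product_id -> products relationship, so report
    authors can ask for totals by category or name without writing joins.
    """
    totals = {}
    for row in sales:
        value = products[row["product_id"]][attribute]
        totals[value] = totals.get(value, 0.0) + row["amount"]
    return totals


by_category = total_sales_by("category")
print(by_category)
```

The point of the model is that the relationship and the calculation are defined once and reused by every report, which keeps numbers consistent across dashboards.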

4. Test and Review

Once the Microsoft Fabric setup is complete, it’s time for validation and refinement:

Validate the Data and Data Quality in the Environment

Testing is the key to ensuring data integrity. This consists of running data validation checks, comparing different datasets with the expected outcomes, and verifying data accuracy and consistency.

Tools such as Azure Data Factory can automate some of these validation tasks. In-depth testing helps identify discrepancies that arise during the data ingestion process.
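The kinds of checks described above can be sketched as a small function. This is a minimal example under stated assumptions: the column names, sample rows, and expected row count are hypothetical, and a real environment would run similar checks against tables in the platform rather than in-memory lists.

```python
def validate(rows, required, key, expected_count):
    """Return a list of issues; an empty list means every check passed."""
    issues = []
    # Completeness: did we load as many rows as the source reported?
    if len(rows) != expected_count:
        issues.append(f"row count {len(rows)} != expected {expected_count}")
    seen = set()
    for index, row in enumerate(rows):
        # Accuracy: required columns must be present and non-empty.
        missing = [col for col in required if row.get(col) in (None, "")]
        if missing:
            issues.append(f"row {index}: missing {missing}")
        # Consistency: the key column must be unique.
        value = row.get(key)
        if value in seen:
            issues.append(f"row {index}: duplicate {key}={value}")
        seen.add(value)
    return issues


# Hypothetical sample of ingested rows with two deliberate problems.
loaded = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 2, "amount": 5.0},
]

issues = validate(loaded, required=["id", "amount"], key="id", expected_count=3)
for issue in issues:
    print(issue)
```

Returning a list of issues instead of stopping at the first failure makes it easier to report every discrepancy from a single test run.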

Fine-Tune the Solution

Tune performance, data processing speed, and reporting. Fine-tuning can involve adjusting database configurations, refining data queries, and making sure your Power BI dashboards load and refresh efficiently.

This stage is often iterative, driven by feedback from the testing phase, and can also involve end-user training.

Provide Documentation Related to the Setup and Leading Practices

Documentation is important for long-term success. By documenting every step of the Microsoft Fabric configuration, you can provide a reference point for future changes and help with training stakeholders.

The documentation must include detailed instructions on the data setup, best practices for using Microsoft Fabric features, troubleshooting guidelines, and a detailed FAQ section.

Conclusion

A thorough, well-planned Microsoft Fabric implementation can reshape your organization’s approach to data handling. From conducting stakeholder sessions to setting up a secure and efficient environment, every stage is critical to building a successful data platform.

A successful implementation lets you capitalize on the benefits of Microsoft Fabric, such as simplified analytics and a scalable data structure. This in turn supports data-driven decision-making across your business.

It is worth considering the professional services available for your implementation through certified partners. Collaborating with experts can help ensure that your Microsoft Fabric implementation steps are executed well, supporting long-term success.